How to Review Pull Requests, Effectively, While Still Being a Nice Person

This guide will attempt to show you how to effectively review Pull Requests (PRs) while still being a nice person. This guide is aimed at junior developers that have just gotten a task of reviewing a PR for the first time. More senior developers can also refer to this guide as a refresher; and I will be referring to this guide in the future when I need a refresher.

This guide is centered around Git but is applicable to other version control systems like Mercurial. However I’ll be specifically talking about the web interface of GitHub.

Step 1: Take a look at the title.

Sometimes the title says everything you need, something like “Move the navbar up 1px.” With a title like that you hardly even need a description. But most of the time the title will give you an idea of what the PR is about and we’ll need to read the description as well. So we’ll move on to the next step.

Step 2: Read the description.

The description should give you all the information you need to determine what the goal of the PR is. If it’s not clear what this change is or why it’s needed, reach out to the author of the PR in a direct message in another channel. They’ll be much more receptive and would probably really like the chance to tell you about it. If they give you enough information where you understand it, add a comment that clarifies it for anyone else reading. Start it with “spoke offline and …”.

The last option should be to leave a comment asking this. The reason is, it’s a public comment and it might be taken the wrong way.

Step 2a: Read the comments as well.

The questions that you have may have been answered already.

Step 3: Read the Code

In order to find out what the code does after you find out what the code is attempting to do you have to determine if the code actually does this. This is the first part of reading the code. The second part of reading the code is to find out where the changes are specifically when you go to test the code.

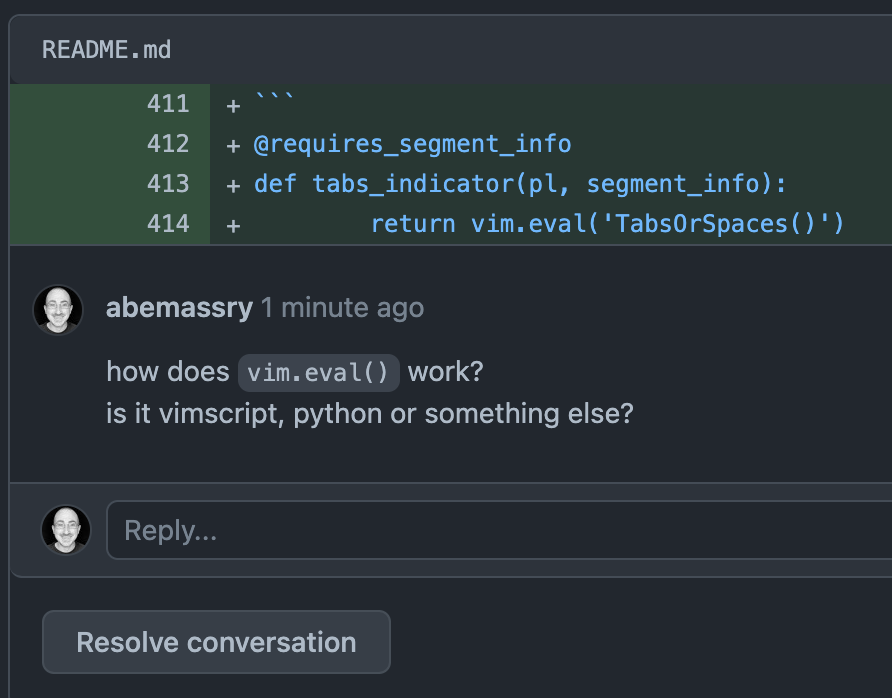

Now at this point if there is something in the code that you don’t understand how it works it’s perfectly fine to write a comment saying something like: “I don’t understand how this works” or “How does this section work?” You can also highlight and use markdown for specific parts. If there are large sections of the code that need explanation then you should probably pair with the author to explain the pieces, if you’re able, or even better screenshare. This can also be done after running the code.

Step 4: Run the code

To really evaluate the code you’ll need to pull the code down and run it. The GitHub CLI makes this easy and there are other tools to make it easy to pull down someone’s branch.

Hopefully you and the team have a development environment set up that makes this easy. If not, that’s an area of improvement. In order to truly evaluate the PR and not just give it a rubber stamp (merge without looking) the code needs to be fully read, run, and evaluated.

Since you’ve read the code and the title and description it should make sense where to look when running the code to see what the behavior is. If it fixes something make sure it’s broken first, then pull the code down and make sure the code fixes the broken thing. If there is an addition make sure it does what the description, code, and title says it’s supposed to do. If there’s a change or non behavioral refactor, check where the changes might be.

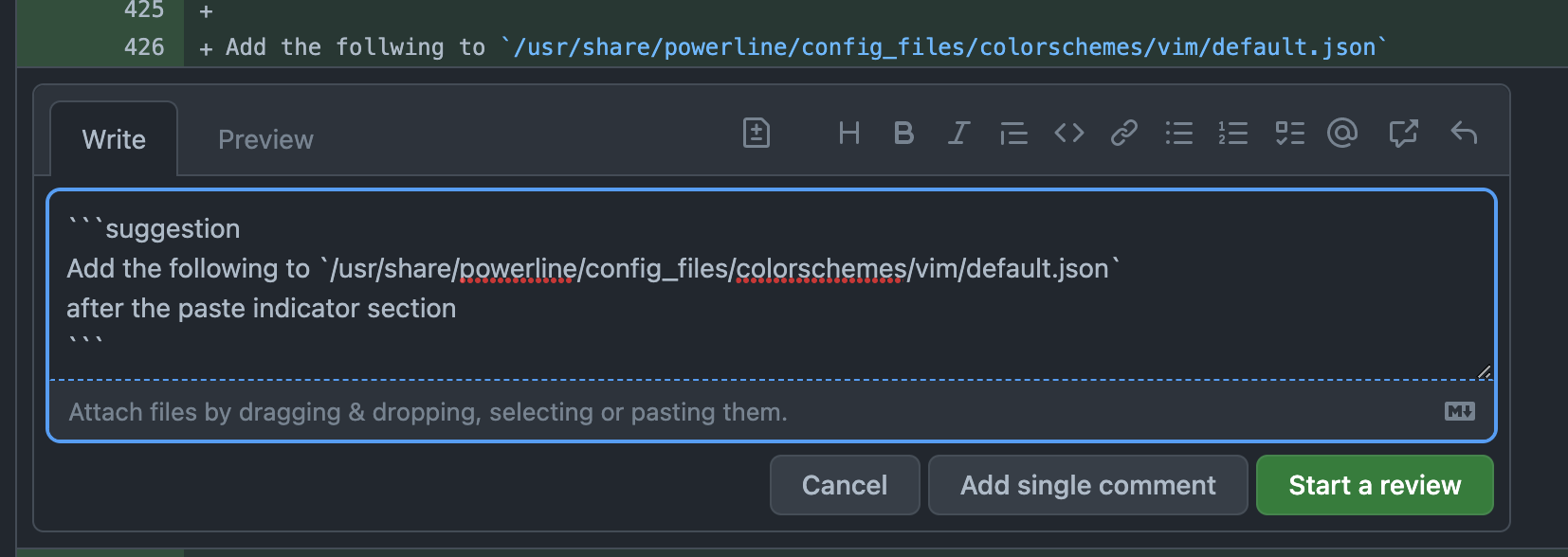

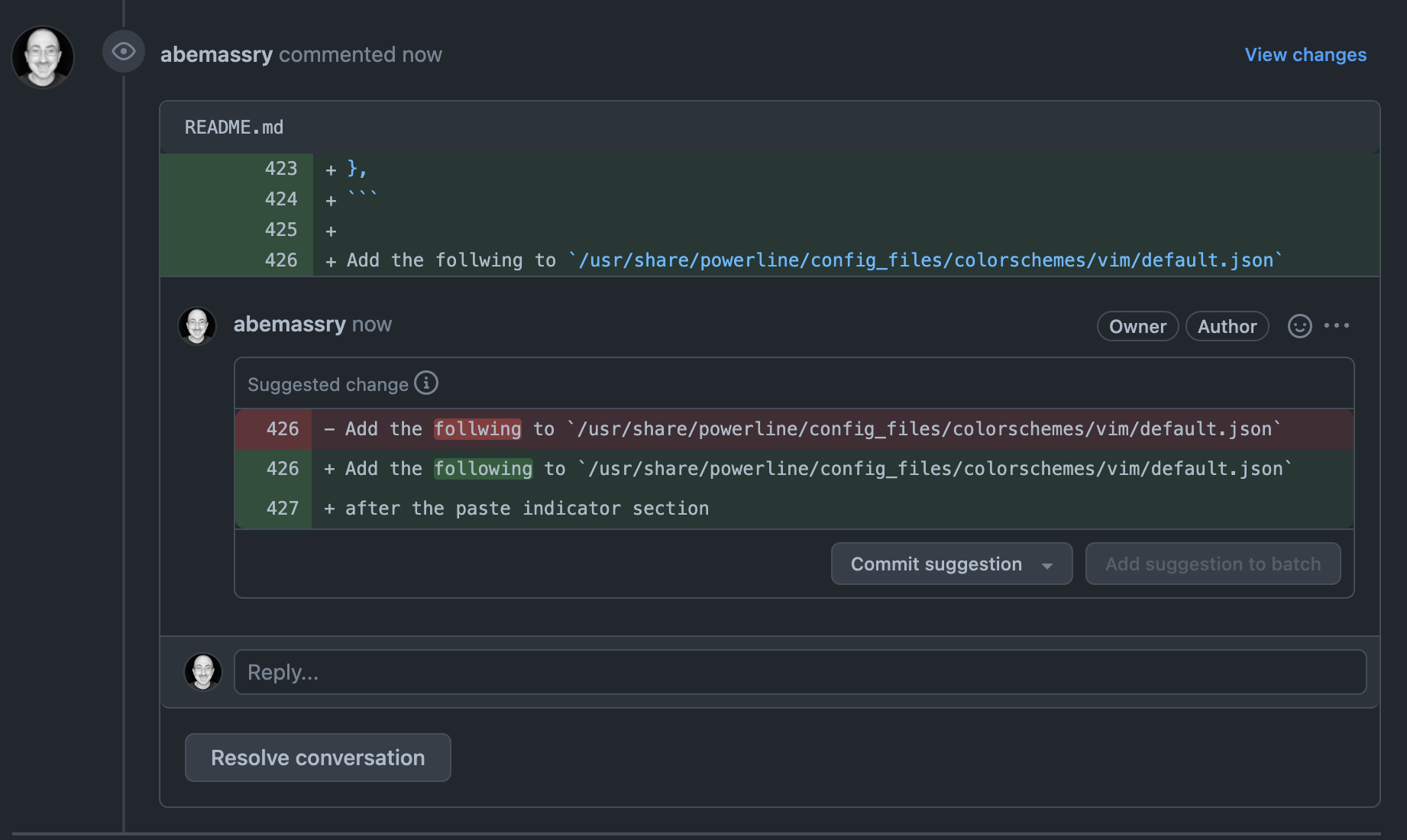

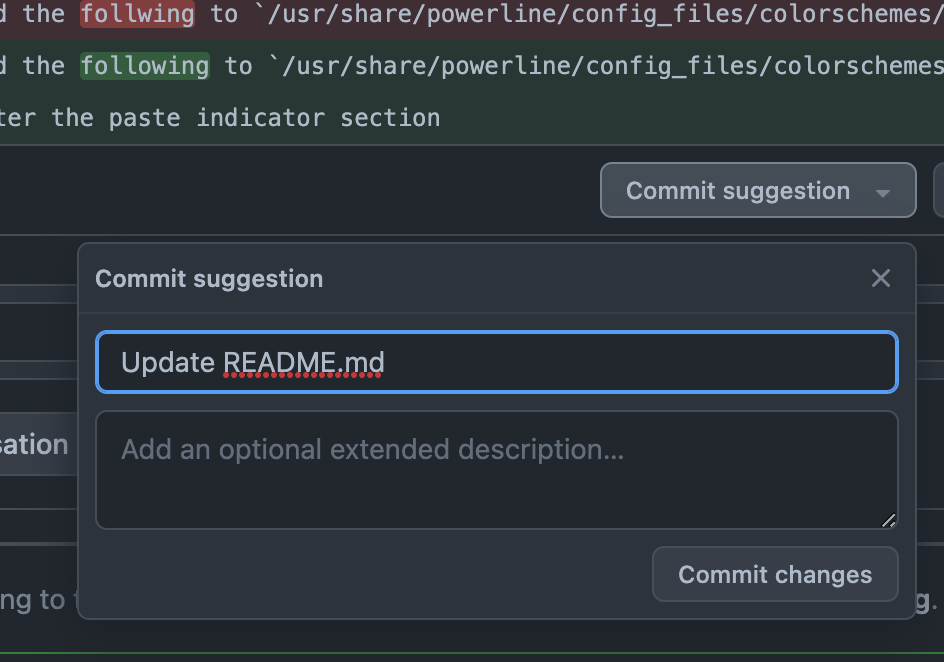

Step 4a: If you find a problem

When running the code you might find an issue, the most helpful thing you can do is figure out where the problem is, by doing a little debugging you might be able to help find out where the problem is. Very often it’s something small that has an exact location in the PR where the problem occurs, after debugging the problem you might be able to find a fix. If you do find a fix, suggest a change inline in the GitHub web interface. This makes it super easy for the author because they only have to click 2 buttons to accept your change. You can also provide them with how you ran the code, what you saw, and how you changed it.

Suggest Changes Button

.png)

Suggesting Changes

Suggested Changes

The author committing the suggestion

If you can’t determine where in the code the problem is occurring, let them know and provide full details on how they can reproduce what you found. After leaving all of the info you might want to reach out to them with a direct message in case they want to pair with you so they can see the issue happening in real time.

Step 5: Re-read the code

You read the code the first time, you checked everything before, title, description, etc. you’ve very familiar with this PR. You’ve even run the code and it works, might as well say “looks good to me” (LGTM) and hit the merge button (don’t do this). This would be wrong to do at this point and you’d miss out on a thorough evaluation of the code and a learning opportunity for yourself.

Now that you’re familiar with the code and you’ve run the code and know what it does, take a second detailed look at it. Figure out which pieces do what. While you’re reading it at this point several items might appear that pop out at you now, that you might not have seen before. Things that might not be a problem now might become a problem in the future. Global variables, scoping issues, confusing naming issues, off by one errors, implicit variable declaration (if that’s a problem for your codebase), incorrect type casting (ints vs floats), freeing resources (memory, objects, sockets, etc.), incorrect bracing around if statements that works now but is a problem waiting to happen (or works only on the happy path), regex that misses some edge case, using after freeing, etc.

If everything looks good then do a quick summary of everything you’ve tried and run and evaluated and state it should do what the PR says. Write this in the review. You’re safe to approve and/or merge the code and deploy it if you have the power.

If the codebase supports unit tests, integration tests, and end-to-end tests those should be included as well and they should be run as well. If there’s an automated way that they run, that’s good too, but for a code review, don’t rely on them. Pretend they don’t exist if you have to, that way you can truly evaluate the code because at the end of the day, the customers or end-users or game players, or people other than the team will be using the real code that you helped evaluate and/or write.

With all this negativity it’s also important to look at the positive aspects. What did the author write that was innovative? How did they solve this specific problem? What takeaways can you see and remember for yourself for the future? This is another learning opportunity, one of many, on your development journey.

Note on direct messaging (DM)

Use whatever format the team is comfortable with (email, Slack, Discord, etc), if in doubt DM first and discuss what you or the author will be posting on the GitHub web interface, remember the GitHub web interface is public and emails are sent out for public activity, so even though you can edit something that’s posted there the original text will still be available.

In a written medium sometimes you miss out on tone of voice.

When working on open source software sometimes the web interface, or mailing list, is the only option. So try to be as polite as the written form allows. And take others’ messages and feedback with grains of salt.

Note on pairing

If your team’s culture supports pair reviewing it really helps speed up the review process, especially for large PRs.

Note on Screen Sharing

I and teams that I’ve worked on and people I’ve worked with have found that screen sharing is indispensable. Screen sharing is even more effective at code review when done on separate computers using screen sharing rather than shoulder to shoulder for the simple fact that you can both see the screen better.

If you’re worried about the bandwidth involved in screen sharing some of the most productive screenshares I’ve been on have been using tmux with both people logged into one machine and calling on the phone. I was using a cell phone but the same thing could have been done on a landline, or the Plain Old Telephone System (POTS). Nowadays there’s a plethora of screen sharing options, Zoom, Slack, Discord, Twitch, obs, ping.gg, Pop, vscode live share, Google Meet, even open source self hosted video chat / screensharing apps like tlk.

Conclusion

If you’re reading this and thinking “by following this guide it will take forever to review a PR!”, you’re right. If a PR took a week to write it’s not going to get a thorough review in 20 minutes, set aside several hours to review. You’ll catch more bugs before they hit prod, you’ll learn more, and the time between opening a PR to getting it merged and deployed will actually shrink.

Tip to managers and senior developers

If you’re a manager or a senior developer that can suggest work roles, consider assigning someone (if they want to) or yourself (volunteer) to only do reviews for a week or two if you envision a lot of PRs being generated that week. If programming is truly a team sport and we’re all on the same team then we should succeed together too.